Chris F. Westbury

Tuesday 30 July 2019

On Michelangelo Merisi da Caravaggio and me

Thursday 27 June 2019

I'm Not Going to Begin to Think: A Farewell Ode to Sarah Sanders

I used JanusNode's Markov chaining function to process some quotes by Trump's stunningly incompetent press secretary, Sarah Sanders, and made the poem below by hand-selecting from the Markov chain output.

I'm not going page by page, talking about a hypothetical this.

I'm not going to negotiate and I can't quickly do the back-and-forth.

I'm not looking at every facet.

I'm not going to draw out an org chart.

Quiet.

Fear is the most basic motivation.

I'm not going to begin to think.

I'm not going page by page, talking about a book that's complete fantasy and just full of tabloid gossip, because it's sad, pathetic, and our administration and our focus is going to lay out a timetable.

Quiet.

I'm not going to lay out a timetable.

I'm not going to begin to think.

I'm not going to get into the weeds.

I'm not going to get ahead of anything that may or may not have happened over the course of the ticktock and that I can't quickly do the math on.

Quiet.

If you want to call yourself ignorant, I'm not going to argue.

It's important people sexually are responsible for what they do unless they are important people.

I'm not going to be a mother.

I'm not going to begin to think what you think.

Quiet.

I'm not going to get into the process of using words. That is called a degenerate sentence.

I'm not going to get into the process ticktock.

I'm not today or tomorrow, or at any point ever, going to comment on Sebastian Gorka.

I'm not going to comment any further than I already have.

Quiet.

You have to hurt others to be extraordinary.

I'm not going to speculate on where we are.

I'm not going to begin to think.

I'm not going to begin to think.

I'm not going to begin to think.
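For readers curious about the Markov chaining step, here is a minimal sketch of the general technique in Python. It is not JanusNode's actual implementation, which is not shown here; the function names, the `<s>` and `</s>` boundary markers, and the three sample lines (borrowed from the poem as stand-ins for real input quotes) are all assumptions made for illustration.

```python
import random
from collections import defaultdict

# Stand-in input: three lines borrowed from the poem above (an assumption;
# the real input was a set of press-briefing quotes).
SAMPLE_LINES = [
    "I'm not going to get into the weeds.",
    "I'm not going to lay out a timetable.",
    "I'm not going to comment any further than I already have.",
]

def build_chain(sentences, order=1):
    """Map each run of `order` words to the list of words seen right after it."""
    chain = defaultdict(list)
    for sentence in sentences:
        # Pad with boundary markers so the chain knows where lines start and end.
        words = ["<s>"] * order + sentence.split() + ["</s>"]
        for i in range(len(words) - order):
            state = tuple(words[i:i + order])
            chain[state].append(words[i + order])
    return chain

def generate(chain, order=1, max_words=30, rng=random):
    """Random-walk the chain from the start marker until an end marker."""
    state = ("<s>",) * order
    out = []
    while len(out) < max_words:
        nxt = rng.choice(chain[state])
        if nxt == "</s>":
            break
        out.append(nxt)
        state = state[1:] + (nxt,)
    return " ".join(out)

chain = build_chain(SAMPLE_LINES)
for _ in range(3):
    print(generate(chain))
```

Every adjacent word pair in the output occurs somewhere in the input, but the lines as a whole can splice different inputs together, which is why hand-selection is still needed to find the good ones.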

Saturday 25 August 2018

On things vile and fantastic

I came across a silly thing on Facebook that asked people to use their initials to mix three words to make their metal-band name, or something like that. Basically it was rule-based text generation at human speed. In the middle of the night I thought it would be amusing to port it to JanusNode and pump it up a little. The output included concepts so vile that I felt like it needed a unicorn chaser, so I also made word lists for super-positive concepts. Here are a few samples of each.

The vile:

Hideous vulva canings

Icky eye socket peeing

Pornographic floozie electrocution

Unappetizing umbilical cord haemorrhaging

Rotting baby execution

Smelly belly buttocks

Creepy perineum dismembering

Bubonic tonsil herpes

Poopy boner traumatizing

Detestable assault reptile

Defecated baby puke

Pus-filled pig fetus

Decomposed ex-boyfriend disembowelment

Putrid fetuses roasting

Terrible anal gunplay

Tumorous wombat masturbator

The fantastic:

Majestic blooming love

Scintillating friendship celebrating

Mesmerizing togetherness blooming

Lovely love joy

Electrifying sexual playfulness

Awesome son fabulousness

Commendable dancing creativeness

Unfathomable couple blessedness

Shimmering lasagna glee

Beguiling delicious kindnesses

Mind boggling lingerie amazement

Perfect chocolate cake friendships

Stupendous beautiful generosity

The wonderful playland of adoration

Delicious revelry champagne

Astounding pancake peacefulness
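The initials game can be sketched in a few lines of Python. This is only a guess at the original rule, not the JanusNode rule set: the three-slot structure, the function names, and the fallback to a random word when no word matches a letter are assumptions, and the word lists are a small subset borrowed from the fantastic samples above.

```python
import random

# Small word lists borrowed from the samples above; the slot names are assumptions.
ADJECTIVES = ["astounding", "beguiling", "commendable", "electrifying",
              "lovely", "majestic", "perfect", "shimmering"]
NOUNS = ["chocolate cake", "friendship", "lasagna", "pancake", "togetherness"]
STATES = ["amazement", "blessedness", "creativeness", "glee", "peacefulness"]

def pick(words, initial, rng):
    """Choose a word starting with `initial`; fall back to any word if none match."""
    matches = [w for w in words if w.startswith(initial.lower())]
    return rng.choice(matches or words)

def name_from_initials(initials, rng=random):
    """Turn up to three initials into an adjective-noun-state phrase."""
    slots = (ADJECTIVES, NOUNS, STATES)
    parts = [pick(words, letter, rng) for words, letter in zip(slots, initials)]
    return " ".join(parts).capitalize()

print(name_from_initials("SLG"))  # Shimmering lasagna glee
```

With lists this small most initials have at most one match per slot; longer lists are what make the output feel less mechanical.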

Saturday 10 March 2018

On nazokake

I recently learned about the Japanese riddle form called a nazokake, which is built around a homophone pair. The structure of a nazokake is something like:

How is an X like a Y?

They both have to do with [homophone] Z.

The nice thing about these riddles is that they are very easy to automate (I was not the first to notice this: e.g. see here and here). I found a list of homophones online, and generated their semantic associations using the word2vec model, which bootstraps semantic association from patterns of text co-occurrence. Then I wrote some simple rules for my text generation program JanusNode, to get it to generate riddles of this form. Here are some samples of what it came up with. Enjoy.

A dividend is like an odor because they are both about cents (scents).

How is a drumstick like an anthem? They are both about cymbals/symbols.

How is a maternity ward like a sports playoff? They both make me think of births/berths.

How is a goalpost like a cry? They are both related to balls (bawls).

How is a game of billiards like a trolley? They are both about cues/queues.

How is a missile like a daughter? They both have to do with the air (the heir).

How is a commander like a CPU? They both make me think of colonels/kernels.

A stockade is like a waddle because they both have to do with a gate (a gait).
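The recipe described above (homophone pair, one associate per homophone, riddle template) can be sketched as follows. This is an illustration rather than the JanusNode rules themselves: the `ASSOCIATES` table is a hand-written stand-in for a word2vec nearest-neighbour lookup, filled in from the samples above, and the function names are hypothetical.

```python
# Homophone pairs taken from the samples above.
HOMOPHONES = [("cents", "scents"), ("cues", "queues"), ("colonels", "kernels")]

# Hand-written stand-in for a word2vec lookup (an assumption): each homophone
# maps to one word or phrase that is semantically associated with it.
ASSOCIATES = {
    "cents": "dividend",
    "scents": "odor",
    "cues": "game of billiards",
    "queues": "trolley",
    "colonels": "commander",
    "kernels": "CPU",
}

def article(phrase):
    """Crude a/an choice based on the first letter only."""
    return "an" if phrase[0].lower() in "aeiou" else "a"

def riddle(pair, associates):
    """Fill the nazokake template with one associate per homophone."""
    first, second = pair
    x, y = associates[first], associates[second]
    return (f"How is {article(x)} {x} like {article(y)} {y}? "
            f"They are both about {first}/{second}.")

for pair in HOMOPHONES:
    print(riddle(pair, ASSOCIATES))
```

In a fuller version the associate for each homophone would come from a trained embedding model, and most of the work would go into filtering out associates that are too obvious or too obscure.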

Thursday 19 October 2017

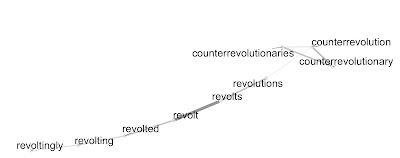

On finding phonesthemic forms

Although word form and meaning are logically distinct, there are several ways that form and meaning can become correlated in language. When words have correlated form and meaning for no obvious reason, we call them phonesthemes. The classic example is the words beginning with gl that all have to do with a short intense visual experience: glossy, glow, gleam, glisten, glitter, glitz, glint, glimmer, and glassy. Another example is the set of sl words that are associated with a deficit in speed: slow, slump, slack, sluggish, slothful, sleepy, slumber, slog, slouch, and slovenly.

It turns out there are many such pockets of arbitrary form-meaning correspondence, especially if we loosen the criteria and look for words with related meanings that share a letter sequence anywhere, instead of just at the beginning. For example, there is another gl word associated with a short intense visual experience that no one ever mentions as part of the glitzy family: the word spangle. As another example, a lot of words semantically related to incarceration contain the bigram ai: along with the word

I’ve been exploring this professionally a little and I will summarize my findings in another blog post if those findings ever get through peer review. For now I just want to share some ‘found poems’ from my exploration. I created graphs that connected words that shared both form and meaning. Some elements of those connected graphs seemed to function almost as little poems, so I have collected a few choice examples for you to enjoy (perhaps). I had a few arbitrary rules, of course, because everything is more fun with arbitrary rules. One rule was that I could erase connected words, but I could not add any. Another rule was that I could not change the location of any word networks, although it was arbitrary in the graphs I made: only networks that actually occurred together could be shown together.

–––––––––––––––––––––––––––––––

The circle of worry

–––––––––––––––––––––––––––––––
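The kind of graph described above, connecting words that share both form and meaning, might be built along these lines. This is a toy sketch and not the method used for the actual analysis: the meaning labels are hand-assigned stand-ins for a real semantic similarity measure, "shared form" is reduced to sharing at least one bigram, and all names are hypothetical.

```python
from itertools import combinations

# Toy vocabulary with hand-assigned meaning labels (an assumption standing in
# for a real semantic model). Words are taken from the examples in this post.
WORDS = {
    "glow": "light", "gleam": "light", "glitter": "light", "spangle": "light",
    "slow": "slowness", "sluggish": "slowness", "slouch": "slowness",
}

def bigrams(word):
    """Return the set of adjacent letter pairs in a word."""
    return {word[i:i + 2] for i in range(len(word) - 1)}

def form_meaning_edges(words):
    """Connect two words when they share a bigram AND a meaning label."""
    edges = []
    for a, b in combinations(sorted(words), 2):
        shared = bigrams(a) & bigrams(b)
        if shared and words[a] == words[b]:
            edges.append((a, b, sorted(shared)))
    return edges

for a, b, shared in form_meaning_edges(WORDS):
    print(f"{a} -- {b}  (shared: {', '.join(shared)})")
```

Note that glow and slow share the bigrams lo and ow but get no edge, because form alone is not enough, while gleam and spangle are connected even though the shared gl sits in different positions.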

Monday 17 July 2017

On thought cancer

I am moved to muse on this topic, as I have been moved to several others on this blog, by a production from my user-configurable dynamic textual projective surface, JanusNode, which recently produced the question: "Would you prefer to die of suicide with a group of others, or of thought cancer in a factory?" I had not previously heard the term 'thought cancer', and, it seems, neither has anyone else. Googling it returns hits to 'throat cancer', and forcing the quote mostly gets sentences starting with "I thought cancer was...". The one hit for the term as JanusNode used it was an entry in the Urban Dictionary, which is not always a reliable source. It defines 'thought cancer' as "The adverse effects one endures mentally from over-thinking things; usually secrets, inside information, or just the side effects of an over-active imagination."

This is OK as far as it goes, but if cancer is a systemic error to which many independent biological systems are subject, I think there is a less metaphorical meaning for thought cancer: the unchecked growth of a thought. Many thoughts are cancerous in this sense: they are self-increasing. I had a friend when I was in university who was very sure that strangers were saying bad things about him when he passed them on the street. I could not understand why this otherwise-rational man would think this, until I did an experiment. I walked down Saint Laurent Street in Montreal, taking on exactly the same assumption. I was amazed at how easy it was to find evidence for the idea that people were saying bad things about me. I caught snippets of many conversations which involved people deriding...someone. Why not me? After all, it has been recognized since Aristotle's time (even though David Hume tried to take credit for it) that consistent contiguity in time and space looks like causality. If Event B occurs just after Event A often enough, we naturally assume that Event A caused Event B. If people are often using the word 'asshole' just after I pass them, it must be because my presence caused them to use it. My friend's delusion was just his brain doing business as usual. The problem was the more he believed it to be true, the more evidence he found for it, and the more he obsessed over it. Thought cancer.

This error of self-referentiality is really just a self-fulfilling prophecy, a well recognized mental error in Cognitive-Behavioral Psychotherapy. Many other psychological symptoms can have a similar underlying cause, most notably obsessive-compulsive symptoms. If I believe that I need to step into my house after a number of steps divisible by seven or terrible things will befall me, then I am going to get a lot of positive feedback for that belief. I always step into my house after a number of steps divisible by seven, and terrible things don't befall me! Phew. I dodge a bullet, again and again.

I believe the Web is now enabling thought cancer at a societal level. It does so because the Web has made it possible to tailor our news sources exactly to our beliefs, so we will only hear from people who think like we do. The result is the echo chamber of social media: we believe that everyone thinks like we do because we have arranged things so that everyone we come into contact with does think like we do. This is the most dangerous thought cancer, because it leads to increasing isolation, which leads to increasing evidence that we are right in our beliefs. In a cancerous tumour, growing cancer begets cancer. Belief systems grow just like tumours, to the extent that we now have a situation where the two centre-right parties in the United States (i.e. the Republicans and Democrats, both of whom are far too right-wing to, e.g., have a chance of getting elected in my own native country of Canada) have somehow come to believe that they define incommensurably opposing views, rather than occupying a very narrow band of the political spectrum as they do.

Cancer treatment is very brutal, involving two main paths: excise the cells that are dividing too much (surgery), and then (usually) poison the patient to take her as close to death as possible without actually killing her (radiotherapy and chemotherapy) in order to kill all of the most rapidly-dividing cells: in this case cancer cells and hair cells (among others).

For the thought cancer that we can now diagnose, especially but not only in the United States, cutting out the tumour is hardly a viable treatment. America can't outlaw (excise) the Democrats and the Republicans (what else is there?), any more than it can outlaw the Internet that has allowed the two parties to build increasingly stable, increasingly distinct, and ever-growing echo chambers.

The USA has now embarked on the only remaining option, the chemotherapy of its societal thought cancer. It is going to take itself as close to death as it can go without actually dying. Donald Trump is societal chemotherapy, the agent that will save the patient only by almost killing her. We don't need to admire Trump for this, any more than we admire other poisons. But America's thought cancer has reached a near-terminal stage. The patient needs to take the poison if she is to survive, even knowing that the poison is going to make her vomit, moan for months, pull out all her hair, and degenerate into a long period of unproductive wasting. When it is all over, the uncontrolled growth of the cancer will be checked (through the destruction of the very narrow view of the political band that currently passes as reality due to the thought cancer) and the patient can return to her former glory.

Trump is not the only reason for the societal cancer; he is just a proximate cause. A good overview of the causal chain that led to Trump serving his poisonous role is given in Jonathan Rauch's (July 2016) Atlantic article, How American Politics Went Insane. I recommend it.

[On a related topic, see also my musings On The Narcissism of Small Differences.]

Tuesday 7 March 2017

On Writing Bad Poetry On Unfortunate Topics For My Children

My children and I used to write poetry for each other occasionally. Here are two pieces of doggerel I wrote for them.

The first one came from being limited by my son Nico to write about one of the first five topics that came up randomly on Wikipedia. My very first topic was "Glutamate dehydrogenase 2, mitochondrial, also known as GDH 2, [...] an enzyme that in humans is encoded by the GLUD2 gene". Although this did not seem a promising topic for a poem, it was better than the next four (Lindmania stenophylla, The Manitoba Day Award [now sadly deleted from Wikipedia], The Sunshine Millions Dash [also now sadly deleted from Wikipedia], and OMB Circular A-123, a "Government circular that defines the management responsibilities for internal controls in [American] Federal agencies") so I wrote an ode to GLUD2.

My daughter Zoe chose not to limit me at all and asked for a poem on any topic in any style, so I wrote a poem for her about not being limited by rules.

Enjoy.

-----

Ode to GLUD2 [For Nico]

An enzyme is one wondrous way

That miracles occur each day.

Each enzyme serves to catalyze

The slow reactions that arise

Inside our bodies; and without

Their helpful work there is no doubt

That life would not exist at all!

Life calls for speed; they heed the call.

And who was it that made that call?

Some call it ‘Chance’ some call it ‘All’;

Some call it ‘God’ but all we know

Is something called to make life so.

And why should we not worship it,

That what’s-it-called that made things fit?

If there’s no God, are mysteries solved?

Are enzymes crap if they evolved?

If you need proof that life’s divine

Then chemistry should suit you fine:

I say that no one ever knew

A thing as lovely as GLUD 2.

-----

On Playing the Game [For Zoe]

You said that I could write in any style:

So I thought: Free verse! But after a while

I thought that things work better with some rules.

I don’t say that all anarchists are fools,

But the world’s big! To focus your view

It helps if there are rules guiding you.

If soccer was played just any old way

I don’t think it would be as fun to play

As it really is: Who would shoot to score

If the goal was moving around or

If some players could use a hockey stick?

I don’t play soccer but I think the trick

(maybe not just there, but in poems too)

Is that masters of the game are those who

Learn to love the rules. So I wrote in rhyme:

Maybe I’ll do free verse another time.

Wednesday 4 January 2017

On How Many Words We Know

How many words does the average person know? This sounds like it should be an easy question, but it is actually very difficult to answer with any confidence. There are a lot of complications.

One complication is that it is not easy to say what it means 'to know' a word. Language users can often recognize many real words whose meaning they cannot explain. Does merely recognizing a word count as 'knowing' it? If not, if we have to know what a word means to count it, how can we decide what it means to 'know the meaning of a word'? As any university professor who has marked term papers will attest, many of us occasionally use words in a way that is not quite consistent with their actual meaning. In such cases, we think we know a word, but we don't really know what it means.

A second complication is that it is not totally obvious what we should count when we reckon vocabulary size. Although the word cats is a different word than the word cat, it might seem unreasonable to count both words when we are counting vocabulary, since it essentially means that all nouns will be counted twice, once in their singular and once in their plural form. The same problem arises for many other words. What about verbs? Should we count meow, meows, meowing, and meowed as four different words? What about catlike, catfight, catwoman, and cat-lover? Since English is so productive (allows us to so easily make up words by glomming together words and morphemes, subparts of words like the pluralizing 's'), it gets even more confusing when we start considering words that might not yet really exist but easily could if we wanted them to: catfest, catboy, catless, cattishness, catliest, catfree, and so on. A native English speaker will have no trouble understanding the following (totally untrue) sentence: I used to be so cattish that I held my own catfest, but now I am catfree.

The third complication is a little more subtle, and hangs on the meaning of the term 'the average person'. In an insightful paper published a couple of years ago (Ramscar, Hendrix, Shaoul, Milin, & Baayen, 2014), researchers from Tubingen University in Germany argued (among other things) that it was very difficult to measure an adult's vocabulary with any reliability. Assume, reasonably, that there are a number of words that more or less everyone of the same educational level and age all know. If we test people only on those words, those people will (by the assumption) all show the same vocabulary size. The problem arises when we go beyond that common vocabulary to see who has the largest vocabulary outside of that core set of words. Ramscar et al. argued (and demonstrated, with a computational simulation) that the additional (by definition, infrequent) words people would know on top of the common vocabulary are likely to be idiosyncratic, varying according to the particular interests and experiences of the individuals. A non-physician musician might know many words that a non-musician physician does not, and vice versa. They wrote: "Because the way words are distributed in language means that most of an adult's vocabulary comprises low-frequency types ([...] highly familiar to some people; rare to unknown to others), [...] the assumption that one can infer an accurate estimate of the size of the vocabulary of an adult native speaker of a language from a small sample of the words that the person knows is mistaken". Essentially, the only fair way to assess the true vocabulary size of adults (i.e. of those who have mastered the common core vocabulary) would be to give a test that covered all of the possible idiosyncratic vocabularies, which is impossible since it would require vocabulary tests composed of tens of thousands of words, most of which would be unknown to any particular person.

So, is it just impossible to say how many words the average person knows? No. It is possible, as long as you define your terms and gather a lot of data. A recent paper (Brysbaert, Stevens, Mandera, and Keuleers, 2016) made a very careful assessment of vocabulary size. To address the first complication (What does it mean to know a word?), they used the ability to recognize a word as their criterion, by asking many people (221,268 people, to be exact) to make decisions about whether strings were a word or a nonword. To address the second issue (What counts as a word?), they focused on lemmas, which are words in their 'citation form', essentially those that appear in a dictionary as headwords. A dictionary will list cat, but not cats; run, but not running; and so on. If this seems problematic to you, you are right. Brysbaert et al. mention (among other attempts to identify all English lemmas) Goulden et al.'s (1990) analysis of the 208,000 entries in the (1961) Webster's Third New International Dictionary. That analysis was able to identify 54,000 lemmas as base words, 64,000 as derived words (variants of a base word that had their own entry), and 67,000 as compound words, but also found that 22,000 of the dictionary headwords were unclassifiable. Nevertheless, Brysbaert et al. settled on a lemma list of length 61,800. To address the third issue (What is an average person?) they presented results by age and education, which they were able to do because they had a huge sample.

And so they were able to come up with what is almost certainly the best estimate to date of vocabulary size (drumroll please): "The median score of 20-year-olds is [...] 42,000 lemmas; that of 60-year-olds [...] 48,200 lemmas." They also note that this age discrepancy suggests that we learn on average one new lemma every 2 days between the ages of 20 and 60 years.
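The "one new lemma every 2 days" figure can be checked with a few lines of arithmetic. The sketch below assumes a 365.25-day year and steady learning between the two ages; the function name is mine, not the paper's.

```python
DAYS_PER_YEAR = 365.25  # assumption: average year length including leap years

def days_per_new_lemma(lemmas_young, lemmas_old, age_young, age_old):
    """Average number of days needed to gain one lemma between two ages."""
    gained = lemmas_old - lemmas_young
    days = (age_old - age_young) * DAYS_PER_YEAR
    return days / gained

# Median scores quoted above: 42,000 lemmas at age 20 and 48,200 at age 60.
rate = days_per_new_lemma(42_000, 48_200, 20, 60)
print(f"{rate:.2f} days per new lemma")
```

That is 6,200 lemmas gained over 14,610 days, or about 2.4 days per lemma, which rounds to the paper's one lemma every 2 days.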

As I hope the discussion above makes clear, 48,200 lemmas is not the same as 48,200 words, as the term is normally understood... Because they focused on lemmas specifically to address the problem of saying what a word is, Brysbaert et al. didn't speculate on how many words a person knows [1], where we define words as something like 'strings in attested use that are spelled differently'. I have guesstimated myself, informally and very roughly, that about 40% of words are lemmas, so I would guesstimate that we could multiply these lemma counts by about 2.5, and say that an average 20-year-old English speaker knows about 105,000 words and an average 60-year-old English speaker knows about 120,500 words...but now I am just muddying much clearer and more careful work.

[1] Update: After this was published to the blog, Marc Brysbaert properly chastised me for failing to note that their paper includes the sentence "Multiplying the number of lemmas by 1.7 gives a rough estimate of the total number of word types people understand in American English when inflections are included", with a reference to the Goulden, Nation, and Read (1990) paper. He also noted that this does not include proper nouns. Without boring you with the details of how I came to my estimate of the multiplier, I will note that my estimate was made on a corpus-based dictionary that included many proper nouns, so our estimates of how to go from lemmas to words are perhaps fairly close. My apologies to the authors for mis-representing them on this point.
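To make the two lemma-to-word conversions easy to compare, here is the arithmetic for both multipliers: my rough 2.5 (from the guess that about 40% of words are lemmas, i.e. 1 / 0.4) and the 1.7 quoted from Brysbaert et al. The helper name and dictionary layout are just illustrative choices.

```python
# Median lemma counts by age, as quoted from Brysbaert et al. (2016).
MEDIAN_LEMMAS = {20: 42_000, 60: 48_200}

# The two multipliers discussed above.
MULTIPLIERS = {
    "my guesstimate (1 / 0.4)": 2.5,
    "Brysbaert et al. (inflections only)": 1.7,
}

def words_from_lemmas(lemmas, multiplier):
    """Scale a lemma count to an estimated word count, rounded to a whole word."""
    return round(lemmas * multiplier)

for label, multiplier in MULTIPLIERS.items():
    for age, lemmas in MEDIAN_LEMMAS.items():
        estimate = words_from_lemmas(lemmas, multiplier)
        print(f"{label}: age {age}: about {estimate:,} words")
```

The 2.5 multiplier reproduces the 105,000 and 120,500 figures above, while 1.7 gives roughly 71,400 and 81,940; the two are not measuring quite the same thing, since only my estimate was built on a dictionary that included proper nouns.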

Brysbaert, M., Stevens, M., Mandera, P., & Keuleers, E. (2016). How Many Words Do We Know? Practical Estimates of Vocabulary Size Dependent on Word Definition, the Degree of Language Input and the Participant’s Age. Frontiers in Psychology, 7.

Goulden, R., Nation, I. S. P., & Read, J. (1990). How large can a receptive vocabulary be? Applied Linguistics, 11, 341–363.

Ramscar, M., Hendrix, P., Shaoul, C., Milin, P., & Baayen, H. (2014). The myth of cognitive decline: Non‐linear dynamics of lifelong learning. Topics in Cognitive Science, 6(1), 5-42.

Sunday 11 September 2016

On the Narcissism of Small Differences

"The method of addition is quite charming if it involves adding to the self such things as a cat, a dog, roast pork, love of the sea or of cold showers. But the matter becomes less idyllic if a person decides to add love for communism, for the homeland, for Mussolini, for Catholicism or atheism, for fascism or antifascism. [...] Here is that strange paradox to which all people cultivating the self by way of the addition method are subject: they use addition in order to create a unique, inimitable self, yet because they automatically become propagandists for the added attributes, they are actually doing everything in their power to make as many others as possible similar to themselves; as a result, their uniqueness (so painfully gained) quickly begins to disappear. We may ask ourselves why a person who loves a cat (or Mussolini) is not satisfied to keep his love to himself and wants to force it on others. Let us seek the answer by recalling the young woman [...] who belligerently asserted that she loved cold showers. She thereby managed to differentiate herself at once from one-half of the human race, namely the half that prefers hot showers. Unfortunately, that other half now resembled her all the more. Alas, how sad! Many people, few ideas, so how are we to differentiate ourselves from one another? The young woman knew only one way of overcoming the disadvantage of her similarity to that enormous throng devoted to cold showers: she had to proclaim her credo 'I adore cold showers!' as soon as she appeared in the door of the sauna and to proclaim it with such fervor as to make the millions of other women who also enjoy cold showers seem like pale imitations of herself. 
Let me put it another way: a mere (simple and innocent) love for showers can become an attribute of the self only on condition that we let the world know we are ready to fight for it."

The narcissism of small differences (and the unfortunate human drive for it) explains much of the insanity in the world in general, and in the current US election cycle in particular.
